In the last few months, an artificial intelligence tool called ChatGPT has taken the world by storm. The new bot can handle a remarkable range of tasks, from holding candid conversations about the meaning of life to writing your essays and suggesting a good bolognese recipe.

Every day, it seems, someone reports a new skill that ChatGPT can perform on your behalf — but what are the implications of an AI tool this smart? And for that matter, is this bot really that smart, or are there chinks in its armor?

Here’s the lowdown on how ChatGPT works, why it’s drawing such mixed reactions from AI experts and researchers, and whether you can tell when it has written something.

How ChatGPT works

Imagine you have an online pen-pal, one who is awake 24/7 and who has read a vast amount of the text on the internet, up to a certain point in time. Imagine that this pen-pal tailors its replies to you the more you tell it about yourself during a conversation, and can help you with a wide range of tasks, from writing up a grocery list to completing the annoying parts of your job.

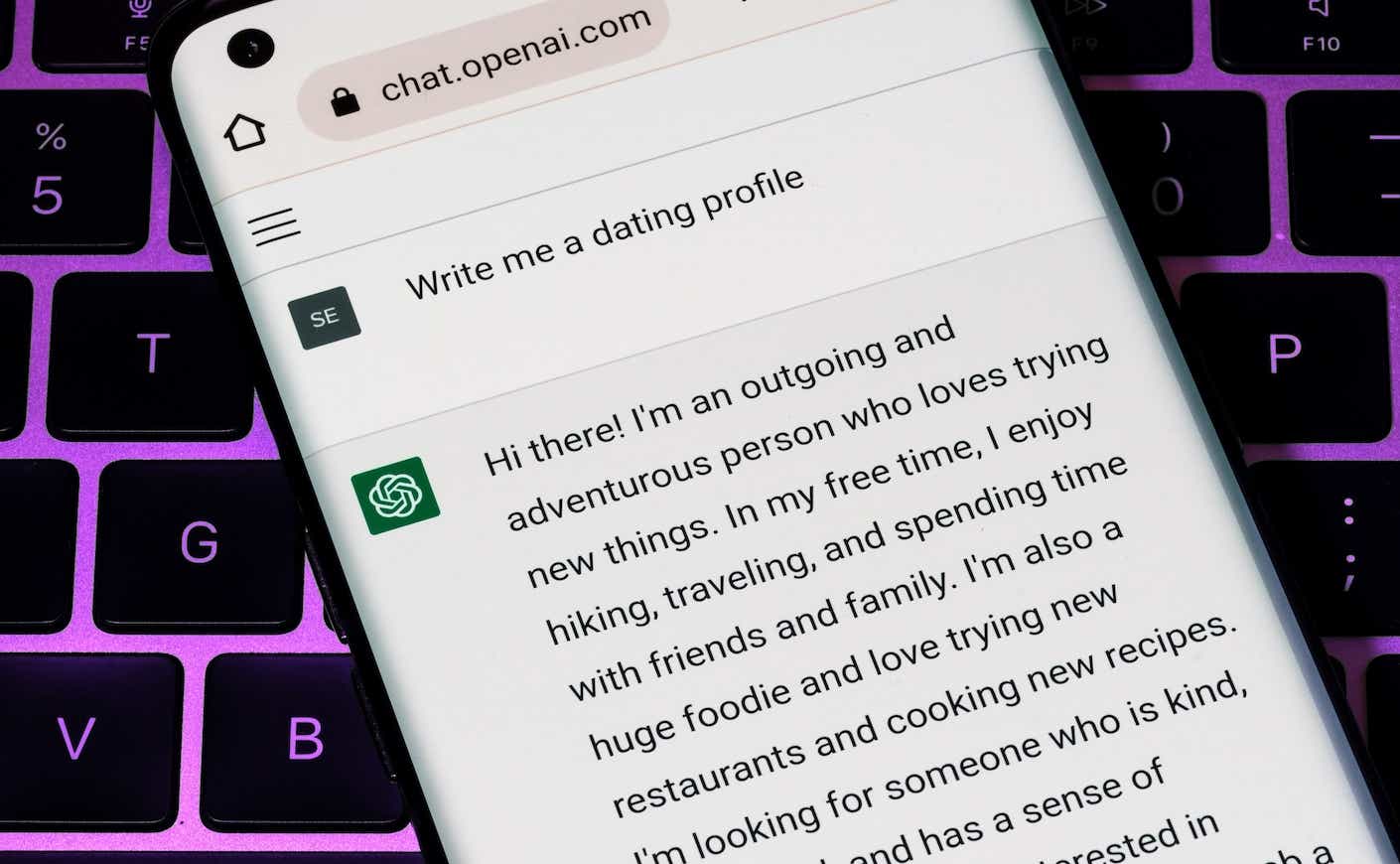

That, roughly, is what using ChatGPT is like. On a technical level, ChatGPT, short for Chat Generative Pre-Trained Transformer, is a “chatbot” built on what’s known as a “large language model.” The product was developed and released by OpenAI, an AI research company with the mission of “ensuring that artificial general intelligence benefits all of humanity.”

ChatGPT launched late last year, and its arrival has already caused quite a stir. The chatbot has gone viral again and again as people continue to show just how vast its capabilities are.

In one instance, a journalist submitted her childhood journal entries to ChatGPT, and trained the bot so that she was basically “speaking to her inner child.” In another instance, a high school teacher lamented how common it has become for students to submit essays that he suspects have been written by ChatGPT.

To use ChatGPT, you simply create a free account with OpenAI, then start typing questions and receiving answers. When you ask the bot something or tell it something, it doesn’t search the internet for an answer. Instead, it generates a response roughly one word at a time, predicting what is most likely to come next based on patterns it learned from an enormous body of text during training. That also means its knowledge stops at the point when its training data was collected.

Engaging with the bot is so open-ended that it might feel overwhelming at first, but once you get going, you’ll quickly get the hang of it. That ease of use goes a long way toward explaining why it has spread so quickly.

People are using ChatGPT to take care of errands, negotiate with internet providers, write blog posts, and more. This all seems fine and dandy (people have recently become enamored with asking ChatGPT to explain complex concepts to them as if they were five, for example), but there is, as you might expect, a pretty big catch.

In fact, there are a few.

Why ChatGPT is scary or even dangerous, according to some experts

Fans of ChatGPT have hailed the technology as the next step toward a world where humans live meaningful, uncluttered lives, no longer spending hours of their precious time on busywork like writing lists, paying bills, and completing mundane tasks at work.

But critics of ChatGPT fear this technology is headed in an entirely different direction — and the stakes couldn’t be higher.

One major concern about the chatbot is its potential to spread misinformation faster than any one human could. Speaking to The New York Times, Jeremy Howard, an artificial intelligence researcher, explained what that might look like in practice.

“You could program millions of these bots to appear like humans, having conversations designed to convince people of a particular point of view,” he said. “I have warned about this for years. Now it is obvious that this is just waiting to happen.”

Others have noted a slightly ironic concern about the chatbot: That some people might think it’s a bit more brilliant than it actually is.

“First and foremost, ChatGPT lacks the ability to truly understand the complexity of human language and conversation,” Ian Bogost, a contributing writer at The Atlantic, wrote. “It is simply trained to generate words based on a given input, but it does not have the ability to truly comprehend the meaning behind those words. This means that any responses it generates are likely to be shallow and lacking in depth and insight.”

Surprisingly, OpenAI’s CEO, Sam Altman, seemed to confirm this view recently. In a tweet, he cautioned against expecting too much of the technology or assuming it’s more sophisticated than it actually is.

“ChatGPT is incredibly limited, but good enough at some things to create a misleading impression of greatness,” he wrote. “It’s a mistake to be relying on it for anything important right now. It’s a preview of progress; we have lots of work to do on robustness and truthfulness.”

Of course, one of the biggest fears about the chatbot is also one that seems hard to avoid: the technology is poised to take over some jobs that humans do today, starting with roles like customer service and copywriting.

The implications of this, if you think about it for too long, might just make you dizzy.

Can you tell if ChatGPT has written something instead of a human?

Is it possible to know if something has been written by ChatGPT instead of a human? In some circumstances, yes — but you’ll need the proper tools.

Recently, a student at Princeton University spent his holiday break building an app to detect whether an essay was written by a chatbot. Edward Tian, a computer science student, said he was motivated to build the app after seeing widespread concerns about plagiarism in the classroom.

“Are high school teachers going to want students using ChatGPT to write their history essays? Likely not,” he tweeted.

It’s possible to try GPTZero, the app he built to detect ChatGPT, but the surge of interest in Tian’s product has caused the site to crash again and again. It seems almost certain, though, that as bots like ChatGPT become more commonly used, more and more technology will be developed to detect them.